RECENTLY there has been much debate about the need to measure things, how to store and access data, how to interpret data, how to make decisions from data and how to use data to influence policy. Questions about science creep into these discussions, including whether it is advancing or impeding progress.

First, a brief history of the scientific process, without going back too far. In the 1620s Sir Francis Bacon set out the scientific method: form a hypothesis, test it by experiment and come to a conclusion based on the results.

In the 1840s Lawes and Gilbert at Rothamsted set up the first long-term experiments measuring the outputs of cropping systems. Around 1925 Fisher developed statistical methods to interpret such data and account for variability. These methods underpin much of what we use today, from the algorithms in our smartphones and search engines to the methods we use to interpret experiments and draw conclusions from their results. By convention we accept a result as real, rather than due to chance, when it passes an agreed probability threshold: the P<0.05 you see in scientific papers means that, if there were no real effect, a result at least as large as the one observed would be expected less than 5% of the time. There is never one truth, but rather a probability that a certain result differs from other results and so either supports or rejects our original hypothesis.
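To make the meaning of a P-value concrete, here is a minimal Python sketch of an exact permutation test, a randomisation-based way of computing a P-value for a difference between two group means. It is an illustration only, not the method used in any study mentioned in this article; the function name `permutation_p_value` and the yield figures are hypothetical.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test for a difference in group means.

    The P-value is the share of all possible relabellings of the pooled data
    whose difference in means is at least as large as the one observed,
    i.e. how often chance alone would give a result this extreme if the
    treatment had no real effect.
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = abs(mean(group_a) - mean(group_b))

    as_extreme = 0
    total = 0
    # Try every way of assigning n_a of the pooled values to the first group.
    for chosen in combinations(range(len(pooled)), n_a):
        chosen_set = set(chosen)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen_set]
        # Small tolerance so floating-point rounding does not hide ties.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            as_extreme += 1
        total += 1
    return as_extreme / total


# Hypothetical yields (t/ha) from two treatments, five paddocks each.
treated = [5.1, 5.4, 5.6, 5.8, 6.0]
control = [4.2, 4.5, 4.6, 4.9, 5.0]
p = permutation_p_value(treated, control)
print(f"P = {p:.4f}")  # P = 0.0079
```

Because every hypothetical treated paddock out-yields every control paddock, only 2 of the 252 possible relabellings are as extreme as the observed split, so P is about 0.008: well under the 0.05 threshold, but still a probability rather than a certainty.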

But people search for the truth, or a universal law, and that is not how science advances. A person puts forward a hypothesis or idea about how something works, does an experiment, and concludes whether the idea holds or should be rejected. Other people do similar experiments or test variations on the idea, and gradually a pattern emerges that either supports a general idea of the phenomenon or identifies exceptions. The exceptions often prove more interesting than the original idea. Scientists can differ in their interpretation of the original idea, and that is a good thing. Einstein's grumbling that God does not play dice, in his long debate with Niels Bohr over quantum theory and the structure of the atom, helped shape modern quantum physics, and Bohr's new ideas were largely vindicated.

An open-air device measures methane in cattle’s breath as they eat. Research has found a small amount of seaweed can significantly reduce enteric methane emissions. (Breanna Roque/UC Davis)

John Black, an eminent Australian scientist, lamented the declining rigour in experimental design and statistical methodology. We all know the refrain "lies, damned lies and statistics". However, if we do not have a rigorous framework for designing experiments and analysing data, then we are left with fake news or statements that cannot be challenged. There is a lot of that around these days.

The argument advanced here is that if we are to rely on statements from various groups and use them to influence policy, we need to do things properly and rigorously, and have the work assessed through proper peer review in established journals. The data need to be collected using accepted methodology, rigorously reviewed by others through peer review, and made open and accessible to other groups for further investigation.

Bad policy thrives in an environment where statements cannot be challenged. Data need to be available and open to challenge, in either their measurement or their interpretation. This was very evident in a paper by Ederer, who outlined the bad science behind statements about red meat and its health and environmental dilemmas, distinguishing between scientific truth and scientific evidence and preferring the latter. He was one of the driving forces behind the Dublin accord, which enlisted animal scientists to support a rigorous, data-driven approach to assessing the role of animals in society.

Pethick and colleagues described the situation as “Advances in the nutritional sciences, agriculture, animal production and agronomy are based on quantification. The quantitative evaluation of evidence is the core strength of the scientific approach used by these disciplines and has been responsible for major gains in food production efficiency over the past century. However, public debate around complex societal challenges may at times be conducted without a strong quantitative base, often leading to suboptimal outcomes in understanding, legislation, and behaviour change”.

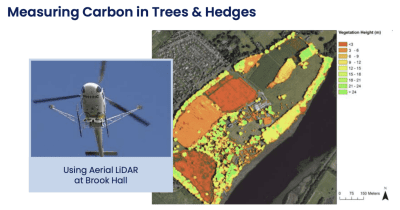

On his farm in Northern Ireland, John Gilliland uses LIDAR laser measurement technology to create a precise register of all the carbon that exists both above and below ground.

Recently in Beef Central, Gilliland outlined the need to measure carbon (C) stores accurately and to quantify the current state of farms with respect to C storage. An important message was to measure things accurately with accepted methodology, and only then to interpret whether farms in Ireland were storing C and how different systems compared. There was no preconceived idea. The cards fell where they fell; hoping that your system was better, or simply feeling good about it, was not an option.

One of the challenges of undertaking experiments in extensive grazing lands, whether on pasture and animal production or on landscape attributes such as carbon storage, is that these systems are highly variable, both spatially and over time. Large year-to-year climatic variability can make findings hard to interpret: there is a lot more "noise", and the signal can be harder to find. For example, climate variability can have a stronger effect on soil carbon than grazing or vegetation management, so teasing apart these influences can be tricky, especially when the results are tied to formal credit schemes.

An important approach to overcoming some of this variability and reaching more rigorous conclusions is to undertake long-term experiments. Unlike Britain, where some of the Rothamsted experiments have been running for over 100 years, Australia has been loath to invest in long-term experiments.
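Why long-term experiments help in noisy systems can be seen with a standard sample-size calculation. The Python sketch below is a rough illustration under stated assumptions, not a design for any real trial: it assumes both treatments are measured every year, so each year yields one treatment difference, and it uses the normal approximation for a two-sided 5% test with 80% power. The function name `years_needed` and the example numbers are hypothetical.

```python
import math


def years_needed(effect, year_to_year_sd, z_alpha=1.96, z_power=0.84):
    """Rough number of years needed to detect a treatment effect.

    Assumes both treatments are measured every year, so each year gives one
    treatment difference, and that those yearly differences vary with
    standard deviation `year_to_year_sd`. Uses the normal approximation
    n = ((z_alpha + z_power) * sd / effect) ** 2, with z_alpha = 1.96 for a
    two-sided 5% test and z_power = 0.84 for 80% power.
    """
    n = ((z_alpha + z_power) * year_to_year_sd / effect) ** 2
    return math.ceil(n)


# Hypothetical example: a management effect of 1 unit, with increasing
# year-to-year noise in the measured difference.
for sd in (0.5, 1.0, 2.0):
    print(f"year-to-year SD {sd}: about {years_needed(1.0, sd)} years")
```

Under these assumptions the answer rises from about 2 years to 8 and then 32 as the noise doubles and doubles again: the years required grow with the square of the noise. With so few years, a t-distribution would push these figures up somewhat, so they are best read as lower bounds.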

Two such experiments in the north that we are familiar with have yielded results well beyond their original expectations: Orr's grazing experiment on Mitchell grassland at Toorak Research Station, and O'Reagain's experiment at Wambiana, near Charters Towers. Both received modest funding and relied on the ingenuity of their lead scientists to keep running. These are the types of experiments needed to understand the dynamics of complex ecosystems and to provide reputable data, gathered in a statistically appropriate manner and freely available to be assessed by any scientist of any ilk. They will stand us in good stead when informing policy makers about whether our practices are sustainable, and if the data show they are not, we need to change.

An important change in experimental approach over the last 50 years has been to conduct experiments in commercial grazing settings and, where possible, at spatial scales relevant to management. Although this can be more challenging in terms of cost, managing and understanding variability, and collecting data, it offers distinct advantages in relevance and in communicating and extending results more effectively. It also provides a good setting for combining producer knowledge with the more traditional scientific method. The approach lends itself to benchmarking among similar production groups, which is one of the most powerful tools for driving change among producers. It can also give producers space to have their ideas and innovations tested in partnership with scientists.

Core samples being taken in a paddock to measure the amount of carbon in the soil. Image: Carbon Link

Where the experimental evidence is uncertain, another approach is to collate the findings of a number of studies and undertake a review or meta-analysis. A good recent example, addressing the uncertainties about the role of management in soil carbon mentioned above, is the paper by Henry et al. It reviewed 38 studies of climate and management effects on soil organic carbon, which gave enough confidence in the evidence to reach a number of clear conclusions that should aid decision-making in policy and management and set priorities for future research.
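The method used by Henry et al. is not described here, so the following is only a generic illustration of the simplest step in a formal meta-analysis: a fixed-effect, inverse-variance weighted average, sketched in Python. The function name `pooled_effect` and the study figures are hypothetical. Where studies differ widely in climate and management, a random-effects model is usually more appropriate than this fixed-effect version.

```python
import math


def pooled_effect(estimates, std_errors):
    """Fixed-effect, inverse-variance weighted mean of study estimates.

    Each study is weighted by 1 / SE^2, so more precise studies count for
    more. Returns the pooled estimate and its standard error.
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical effects of a grazing change on soil carbon from three studies.
estimates = [0.4, 0.1, 0.3]
std_errors = [0.2, 0.1, 0.3]
est, se = pooled_effect(estimates, std_errors)
print(f"pooled effect {est:.3f}, 95% CI half-width {1.96 * se:.3f}")
```

The pooled standard error is smaller than that of any single study, which is why combining studies can give more confidence than any one of them alone.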

If we do not start measuring the things important to society, such as carbon balance, methane output, animal welfare, tree clearing and product quality, we will not have the social licence to operate. If we continue to attack the scientific process because it does not give us the results we want, we will lose out. The scientific process and modern agriculture have evolved together in a mutually beneficial way. Science may move slowly and is perhaps not as definitive as we would like, but getting it right is important. Ensuring that long-term measurements are in place, that they use accepted methodology and that the data are freely available will enable us to keep the social licence under which we operate.

Authors

Dennis Poppi is an Emeritus Professor of Animal Nutrition at the University of Queensland, Gatton Campus. During his career he has undertaken extensive research, extension and teaching on ways to improve beef production systems in Australia and overseas.

Andrew Ash has recently retired as a Chief Research Scientist in CSIRO Agriculture and Food. He has 35 years of research experience in tropical rangelands and agricultural systems in northern Australia and south-east Asia, with a particular emphasis on developing management systems to improve profitability and environmental outcomes for rural communities.

Ian McLean is the Managing Director of Bush AgriBusiness Pty Ltd, an advisory firm providing independent analysis and trusted insights to the Australian pastoral industry. He is an advocate for the application of science and economics to agricultural businesses.

(please address any communication to Ian McLean)